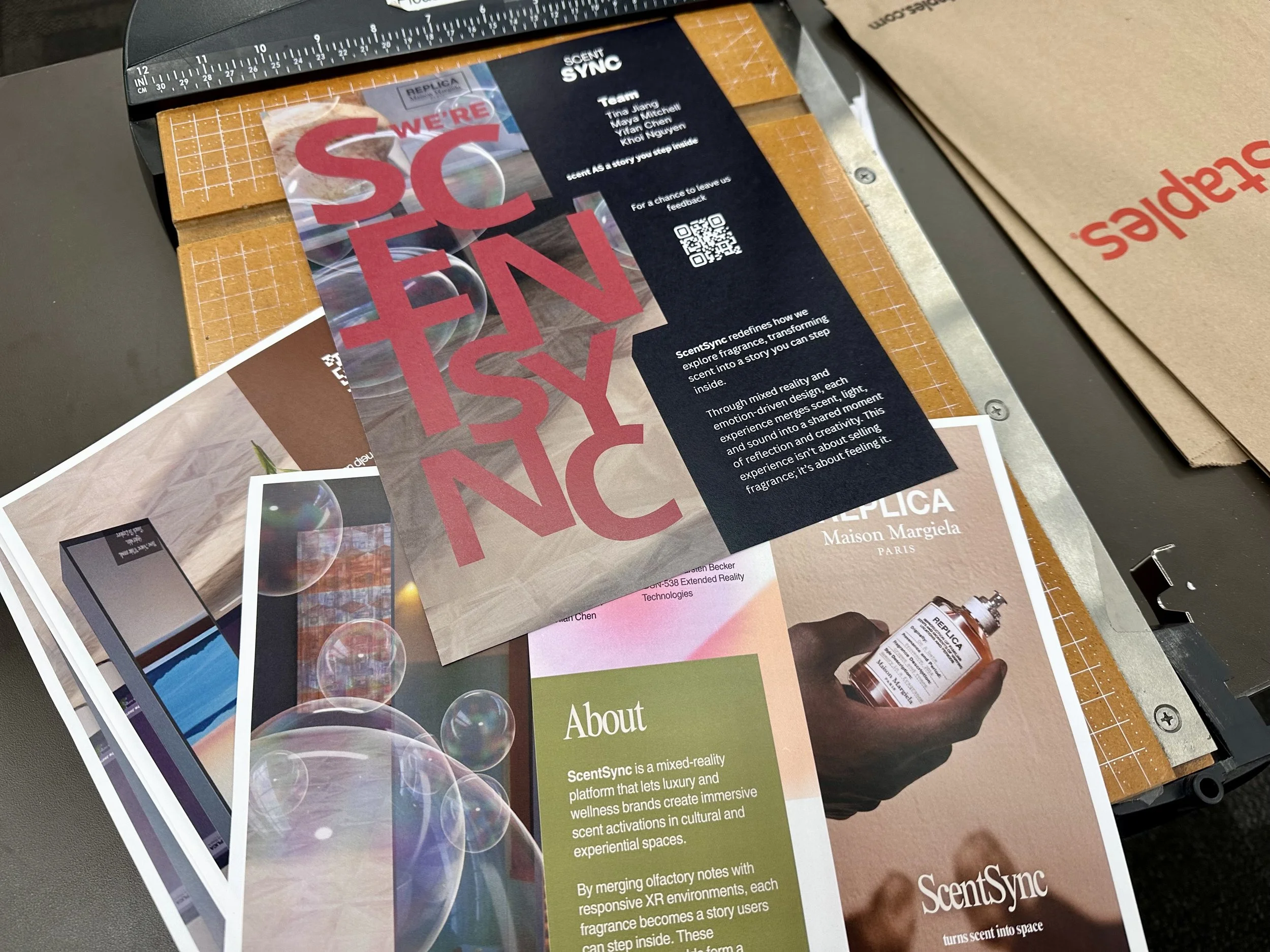

Immersive fragrance storytelling for Apple Vision Pro

ScentSync is a spatial computing experience that turns fragrance notes into interactive environments — helping users understand scent through mood, symbolism, and movement instead of static descriptions.

ScentSync

2025

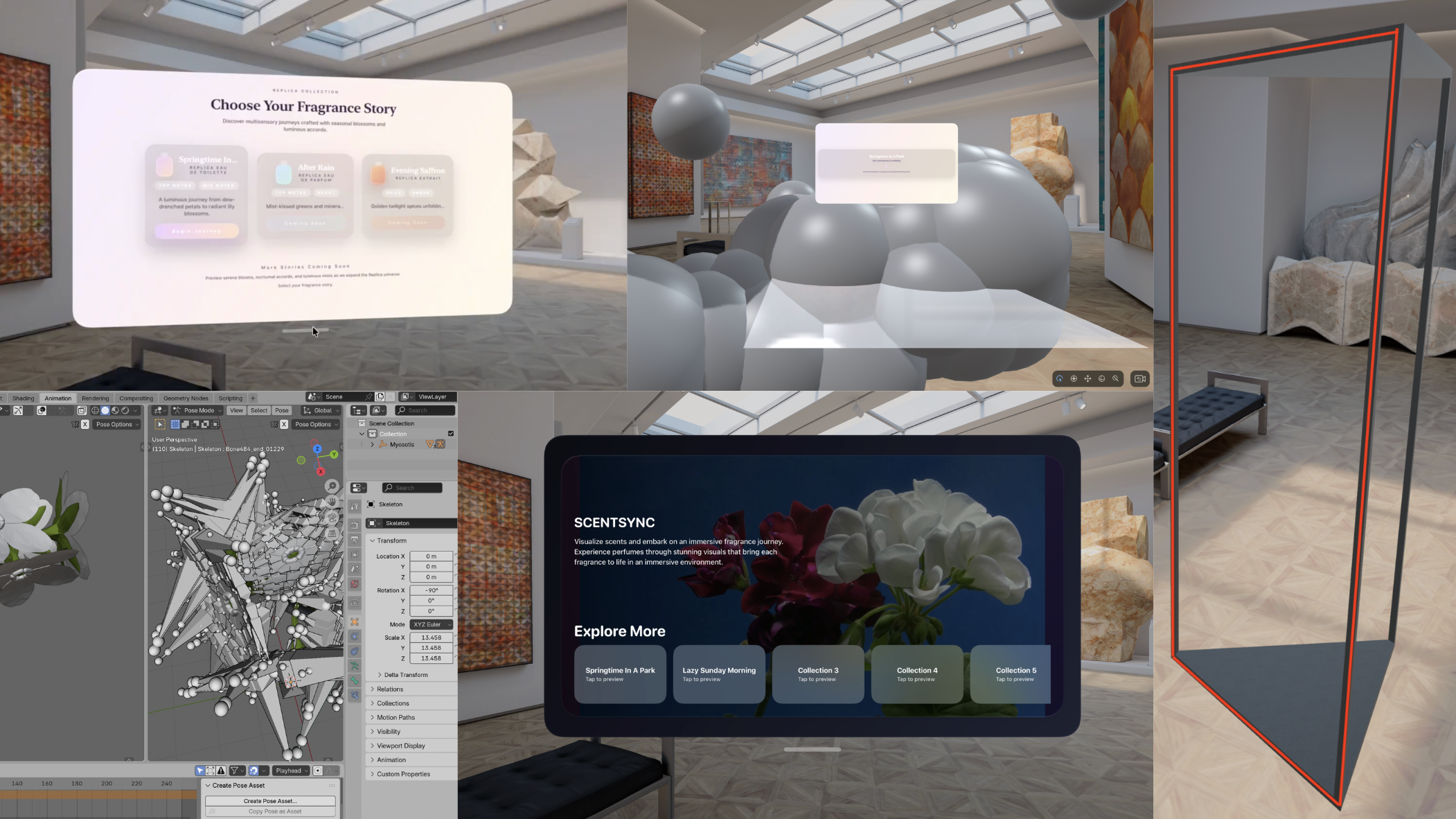

Role: Developer & 3D Immersive Builder

Platform: visionOS / Apple Vision Pro

Tools: Xcode, SwiftUI, RealityKit, Reality Composer Pro, Rhino, Blender

Team: Tina Jiang, Maya Mitchell, Yifan Chen, Khoi Nguyen

Fragrance is emotional. It’s also hard to communicate—especially online. Most digital fragrance discovery depends on marketing language (“clean,” “fresh,” “warm”) that feels vague and abstract, leaving users unsure of what a scent actually feels like.

ScentSync asks a simple question:

What if you could explore a fragrance the way you explore a place?

Spatial computing offers a new medium for sensory storytelling. Rather than describing a scent, we wanted users to step into it.

Why this project

ScentSync translates fragrance profiles into immersive worlds. Each scent becomes a spatial scene built around:

symbolic 3D objects (representing key notes),

interactive moments (touch, trigger, response),

sound + narration (to shape mood and memory).

Instead of reading notes, users discover them—through light, texture, motion, and space.

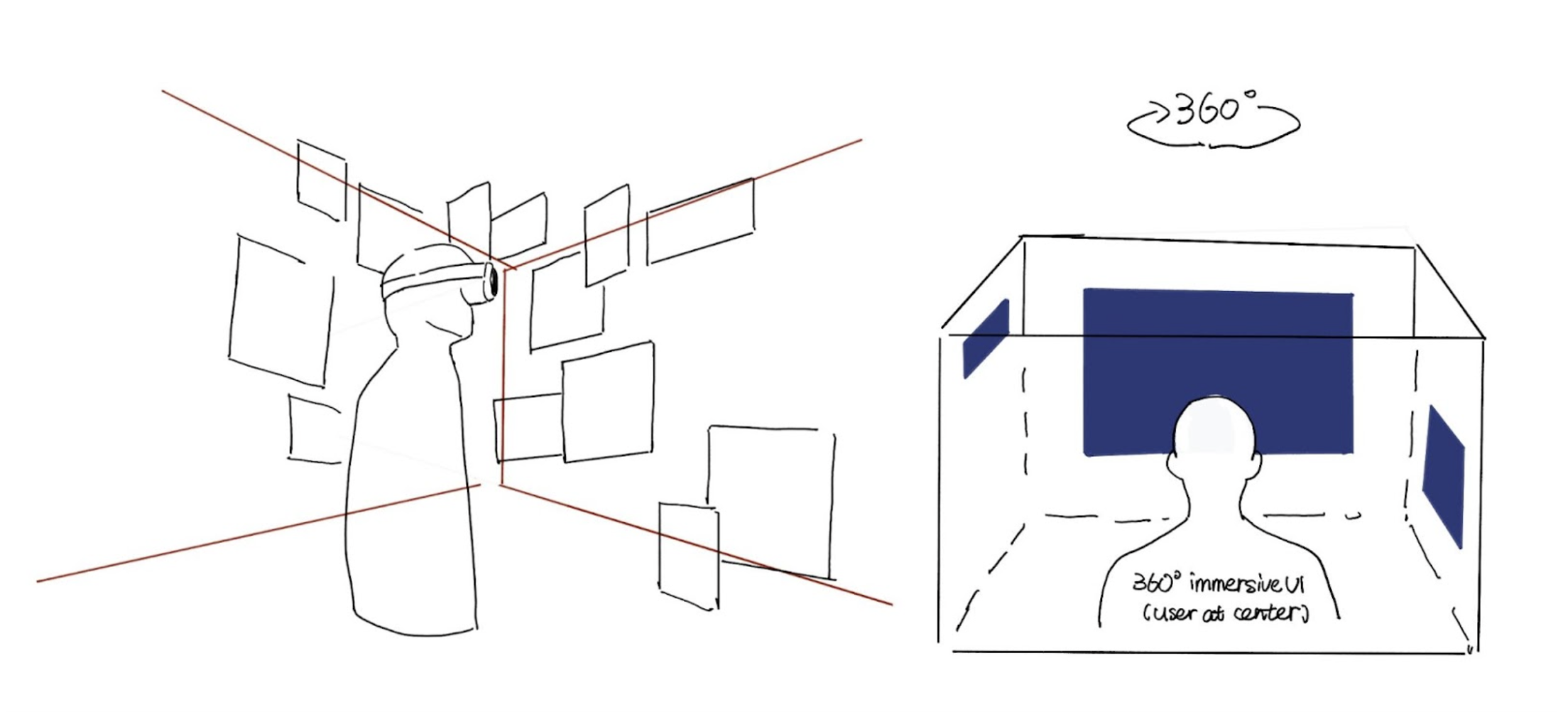

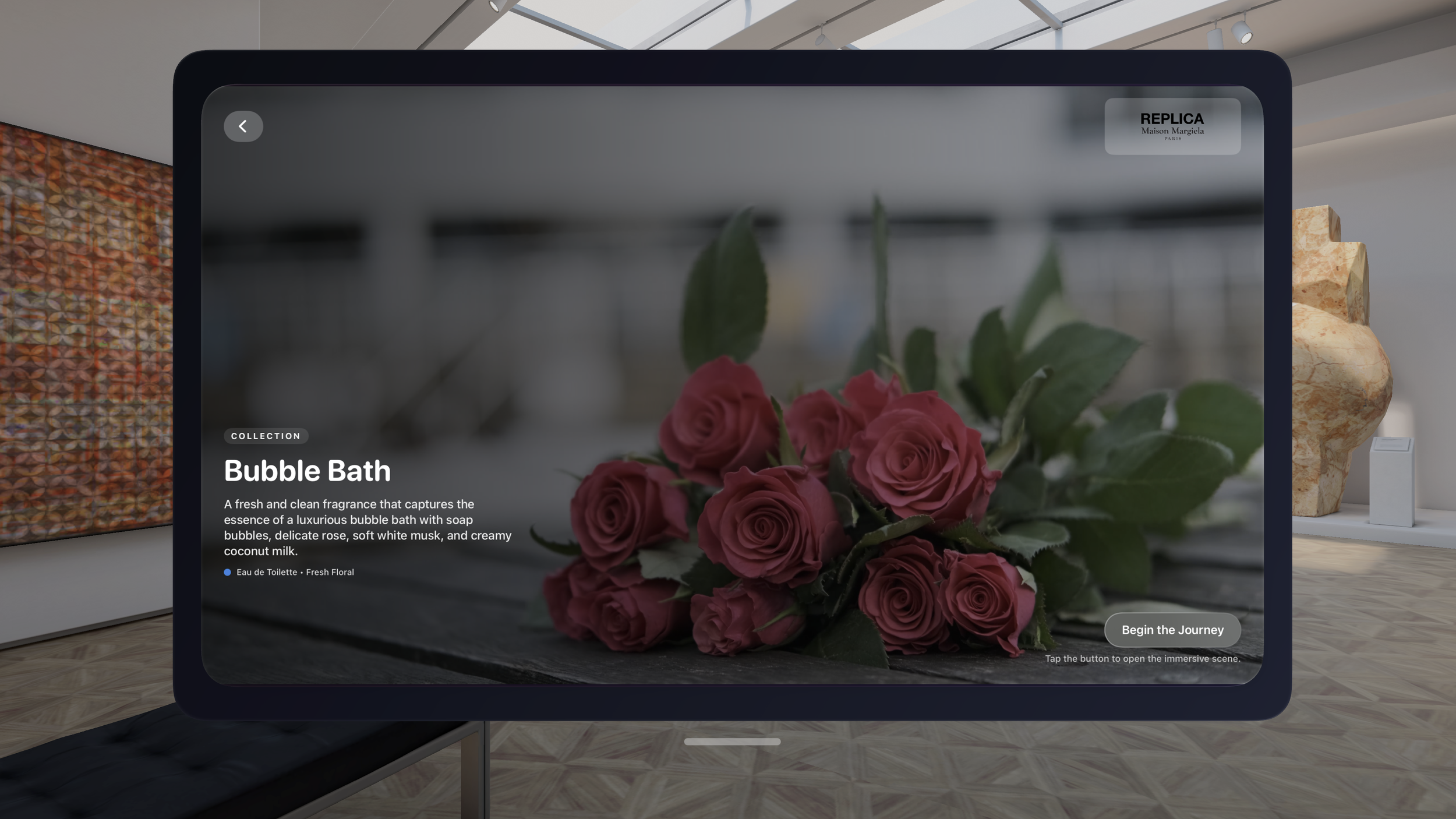

ScentSync is structured as a guided fragrance journey:

Select a fragrance from the main menu

Enter an immersive portal into a themed world

Interact with note-based objects that respond to user attention

Listen to ambient sound and narration to deepen emotional clarity

Transition between fragrance scenes seamlessly

Return and explore another scent
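The journey above can be read as a small state machine. The following is a minimal sketch of that idea in plain Swift, not the project's actual code: the type and function names (`Fragrance`, `JourneyState`, `JourneyEvent`, `advance`) are hypothetical, and only the three scene names come from this case study.

```swift
/// The three fragrance worlds named in this case study.
enum Fragrance: String, CaseIterable {
    case bubbleBath = "Bubble Bath"
    case springtimeInAPark = "Springtime in a Park"
    case lazySundayMorning = "Lazy Sunday Morning"
}

/// Where the user currently is in the guided journey (hypothetical model).
enum JourneyState: Equatable {
    case menu
    case immersed(Fragrance)
}

/// Hypothetical events the interface could send.
enum JourneyEvent {
    case select(Fragrance)   // pick a scent from the menu, or switch scenes directly
    case returnToMenu
}

/// Pure transition function: the same state and event always give the same result.
func advance(_ state: JourneyState, with event: JourneyEvent) -> JourneyState {
    switch (state, event) {
    case (_, .select(let fragrance)):
        // Selecting a scent works from the menu and from inside another scene,
        // which is what allows scene-to-scene transitions without a menu stop.
        return .immersed(fragrance)
    case (.immersed, .returnToMenu):
        return .menu
    case (.menu, .returnToMenu):
        return .menu
    }
}
```

In a visionOS app, a model like this would typically sit behind SwiftUI's `openImmersiveSpace` and `dismissImmersiveSpace` environment actions, with each state change deciding which immersive space is shown. Keeping the transition logic pure makes it easy to check without a headset.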

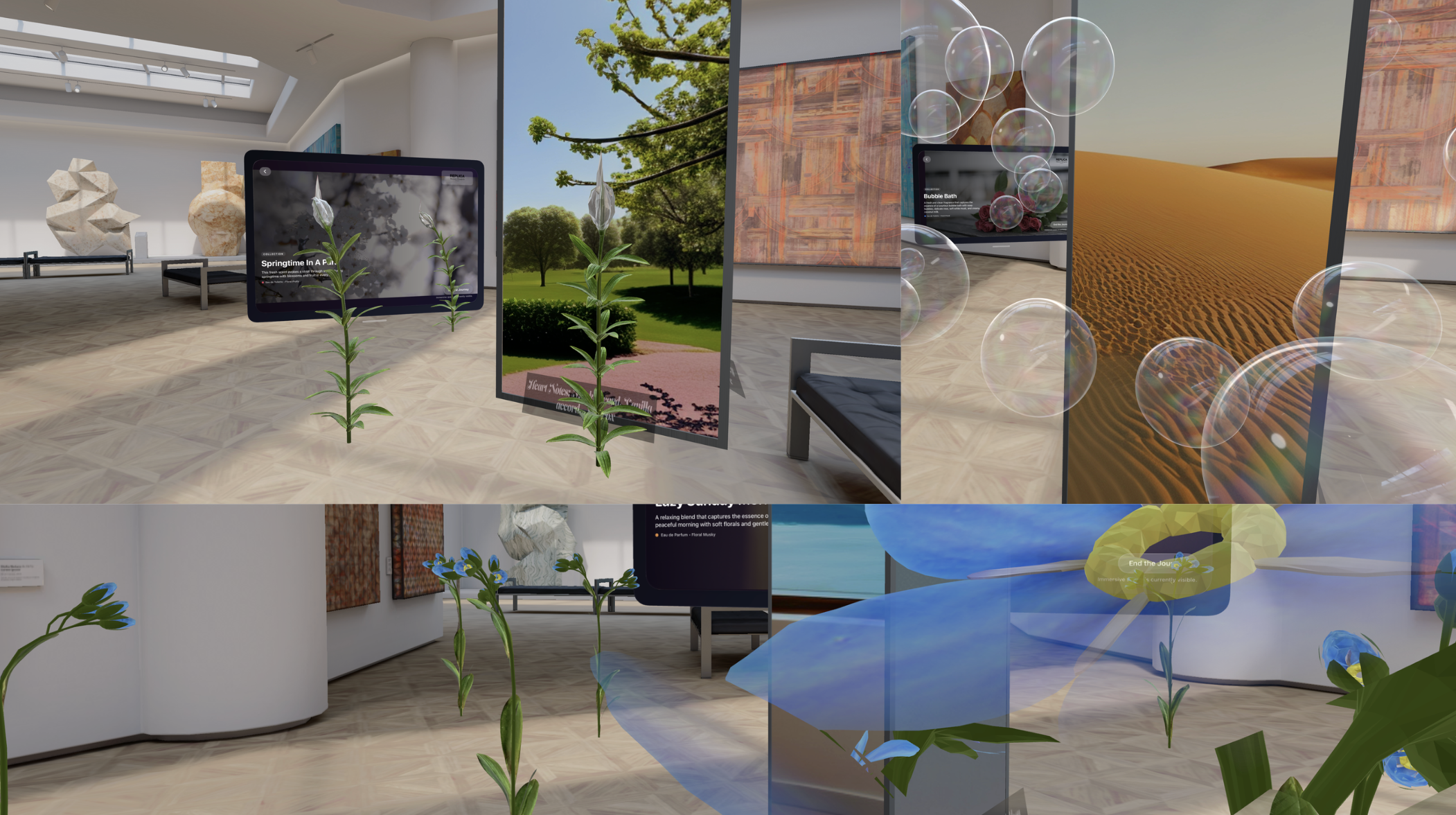

Immersive scenes

We built three core immersive scenes, each representing a distinct fragrance mood:

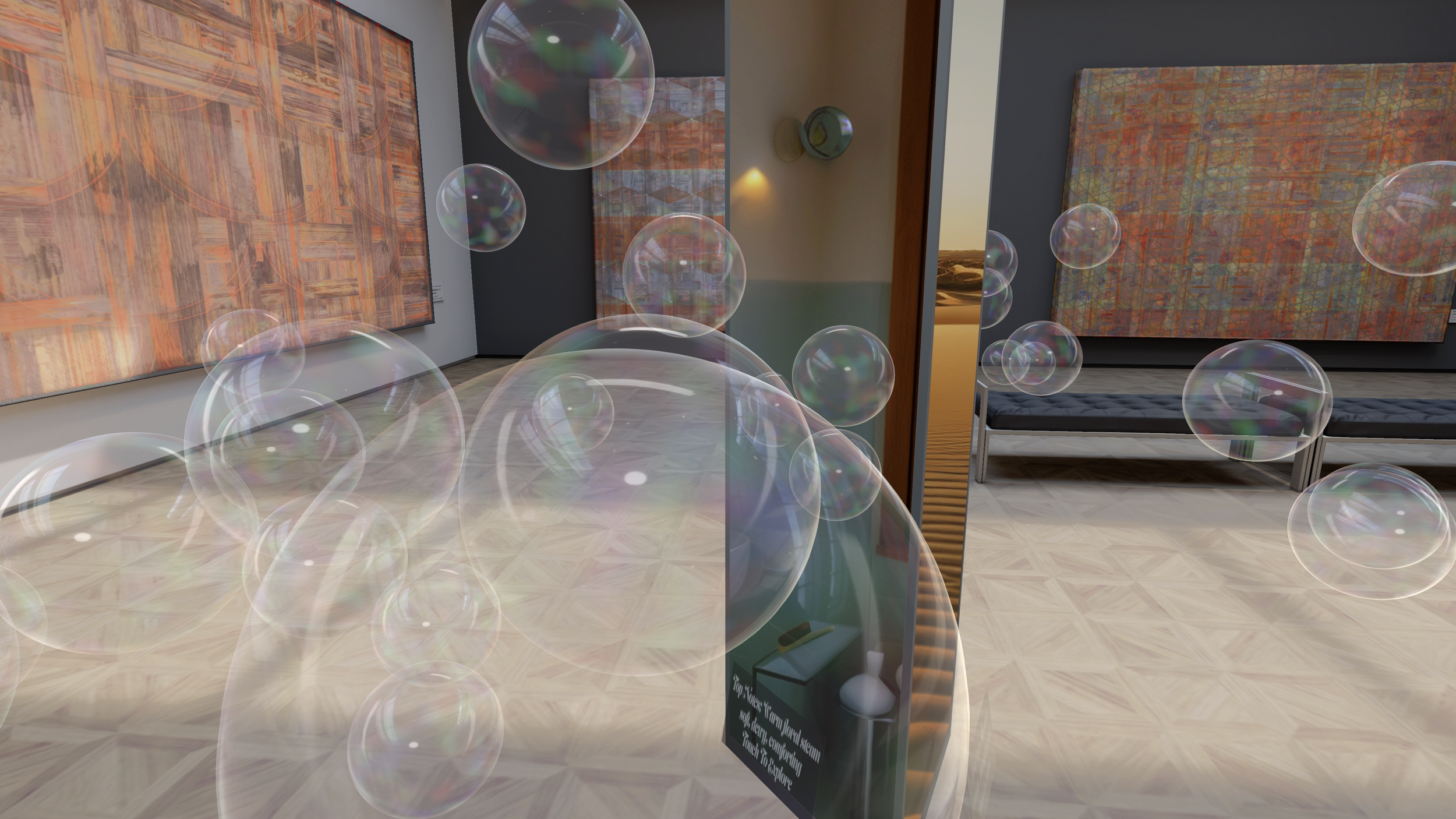

Bubble → Bubble Bath

A playful, weightless space that communicates comfort and freshness through floating forms and soft motion.

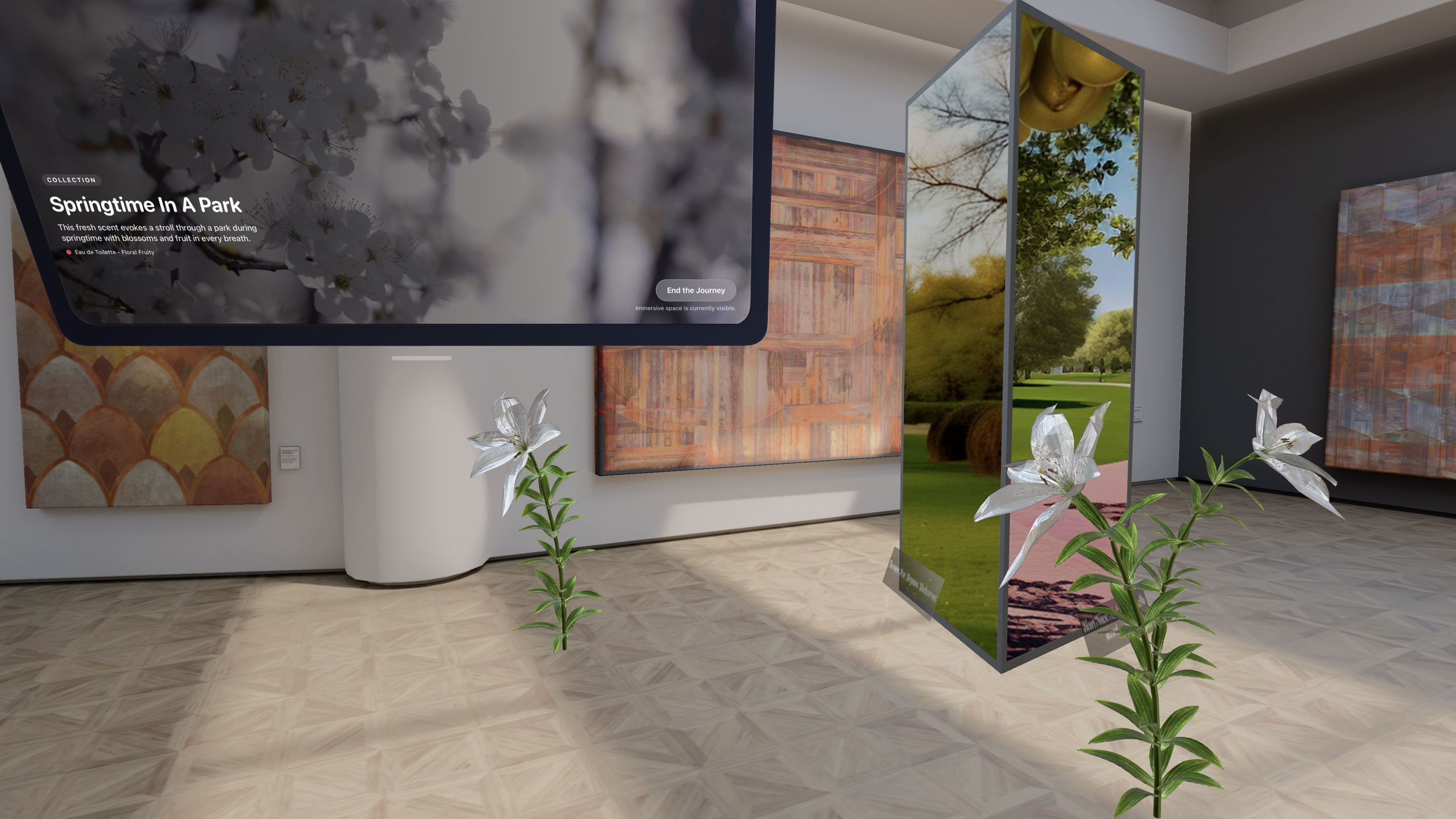

Lily → Springtime in a Park

A bright floral environment with blooming cues, sunlight warmth, and gentle spatial openness—built to feel alive and optimistic.

Blue Iris → Lazy Sunday Morning

A quiet, intimate atmosphere—cooler tones, softer pacing, and calm spatial density to express clean, private comfort.

Concept / Experience

Scent via Story

ScentSync redefines how we explore fragrance, transforming scent into a story you can step inside.

Through mixed reality and emotion-driven design, each experience merges scent, light, and sound into a shared moment of reflection and creativity. This experience isn’t about selling fragrance; it’s about feeling it.

Users are carried beyond scent alone, into memory, mood, and environment. The experience is designed for ‘third spaces’ such as museums, pop-ups, lounges, art installations, and fashion parties, where the goal is to evoke feeling and connection through sensory storytelling.


Interactive fragrance notes

Fragrance notes are represented by 3D metaphors users can touch and explore. This turns a passive description into a physical moment.

Scene-based storytelling

Each scent becomes a narrative environment. Lighting, timing, sound, and animation work together to make the mood legible.

Seamless immersive transitions

Users can shift between scenes smoothly, allowing comparison across scents without breaking immersion.

Ambient sound + voice narration

Audio supports emotional interpretation, strengthening memory and improving clarity for first-time fragrance exploration.


Because this was an immersive system, the “design” was inseparable from the technical workflow. My process followed a loop:

1) Translate narrative into spatial composition

We started by converting fragrance identity into:

mood keywords

environmental metaphors (light, texture, space)

interaction targets (what users touch / trigger)

2) Prototype quickly in 3D

I blocked scenes in Blender using low-poly placeholders first:

to validate composition and readability

to estimate scale and spacing

to identify where animation mattered most

3) Export and validate in visionOS early

XR production is full of hidden constraints. The most important decision we made was to test early inside the actual headset, so we didn’t overbuild assets that wouldn’t survive export or hold up under headset performance limits.

4) Iterate for clarity + stability

Each build pass optimized:

animation reliability

object hierarchy

scene density and performance

interaction responsiveness

A major challenge: Geometry Nodes → Rigging pivot

One of the most defining moments of the project was discovering that my early animation approach wouldn’t ship.

What I tried

I originally designed a blooming flower effect using Blender Geometry Nodes, which produced beautiful procedural motion.

What broke

When exporting to USD / USDZ for visionOS, this method did not reliably preserve the animation behavior. Visual fidelity degraded and the animation became unpredictable.

The pivot

After extensive testing and debugging, I made a key decision:

Switch from procedural Geometry Nodes → skeletal rigging workflow

Why it worked

Rigging allowed:

consistent export behavior,

controllable animation timing,

and better runtime performance inside the headset.

This pivot was a critical shift from “looks good in Blender” to “works in the real product.”
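One practical benefit of a rigged, baked animation is that it travels inside the exported file as a named clip that RealityKit can list and play. The sketch below shows roughly how such a clip could be triggered at runtime. It is an illustration under assumptions, not the project's code: the clip name "Bloom" and the helpers `clipIndex` and `playBloom` are hypothetical, and the RealityKit part only compiles where that framework is available.

```swift
#if canImport(RealityKit)
import RealityKit
#endif

/// Picks which clip to play: the one whose name matches `preferred`,
/// otherwise the first available clip, otherwise nil.
/// (Hypothetical helper; clip names can be nil, hence `String?`.)
func clipIndex(preferred: String, in names: [String?]) -> Int? {
    if let match = names.firstIndex(where: { $0 == preferred }) {
        return match
    }
    return names.isEmpty ? nil : 0
}

#if canImport(RealityKit)
/// Plays the baked bloom animation on an already-loaded entity.
/// Assumes the clips are attached to this entity; depending on the export,
/// they may sit on a child entity instead.
@MainActor
func playBloom(on flower: Entity, clipName: String = "Bloom") {
    let clips = flower.availableAnimations
    guard let index = clipIndex(preferred: clipName, in: clips.map { $0.name }) else {
        // An empty list is a quick signal that the animation did not survive export.
        print("No baked animation found on \(flower.name)")
        return
    }
    flower.playAnimation(clips[index], transitionDuration: 0.3, startsPaused: false)
}
#endif
```

Checking `availableAnimations` right after loading an asset is also a cheap way to catch export problems early: if the list is empty, the motion seen in Blender never made it into the file.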


Over multiple build cycles, I prioritized improvements that affected user perception most:

Immersion polish

smoother transitions between scenes

more readable object placement and spacing

softer lighting to reduce harshness in the headset

Interaction clarity

simplified interaction moments to avoid confusion

ensured objects had clear affordances (what is interactive vs decorative)

Performance and stability

reduced scene complexity where needed

optimized asset scale and animation curves

maintained stable headset performance for demo conditions

Outcome

ScentSync culminated in a working immersive visionOS demo that translates fragrance identity into interactive spatial storytelling. The final experience features three distinct fragrance worlds, each designed with its own mood-driven environment, symbolic 3D note elements, and narration-supported pacing. By combining visual metaphor, interaction, and sound, the project demonstrates how spatial computing can bridge the gap between abstract fragrance descriptions and emotional understanding. Beyond the prototype itself, ScentSync serves as a proof-of-concept for how XR can enhance product discovery by making intangible qualities—like scent—more memorable, legible, and experiential.

Next steps

If extended into a more complete product experience, the next iteration of ScentSync would focus on turning exploration into personalization and long-term engagement. I would add user-facing features such as saving favorite scents, tagging moods, and building collections, enabling users to reflect on what they like and why. I would also develop a comparison mode that supports quick scene switching with memory cues to help users distinguish subtle differences between fragrances. On the immersive side, I’d expand interaction depth with more nuanced micro-interactions per note type, and introduce accessibility controls (audio intensity, brightness, motion pacing) to reduce sensory overload. Ultimately, the goal would be to evolve ScentSync from an immersive demo into a scalable fragrance discovery tool that could integrate retail product information and support real-world buying decisions.